This is the first instalment of our deep dive into the world of A/B testing. To read more, you can find Part 2 here.

My name is Rok Piltaver and I’m a Senior Data Scientist at Outfit7. Recently, I was invited to speak at a conference about the A/B testing process and best practices that we developed over the years working on some of the world's most popular mobile games. I spoke about the prerequisites for a successful A/B test, the testing processes that ensure actionable results, how the A/B test infrastructure speeds up implementation and analysis, and how to avoid common pitfalls. Since the lecture wasn’t recorded, I decided to share the information in a blog post.

What is A/B testing?

A/B testing helps organizations evaluate product improvements using data and statistics, eliminating guesswork and helping them understand what makes their users tick.

At a basic level, you take an existing product (e.g. a mobile game or an advertising campaign) and change part of it to make a new variant (e.g. game difficulty, reward generosity or frequency of in-game ads). Then, you measure which variant performs better. The goal is to improve the product and business performance, or to learn something new about the product and its users.

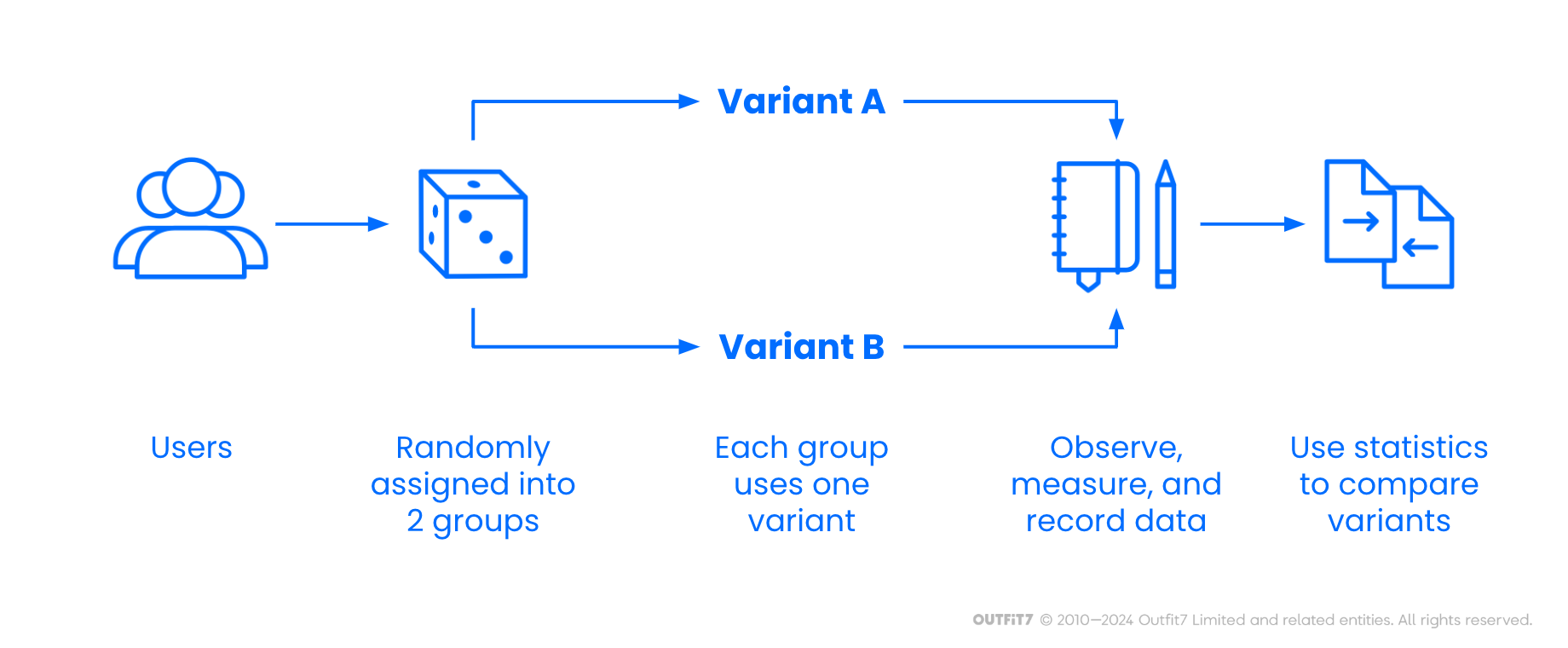

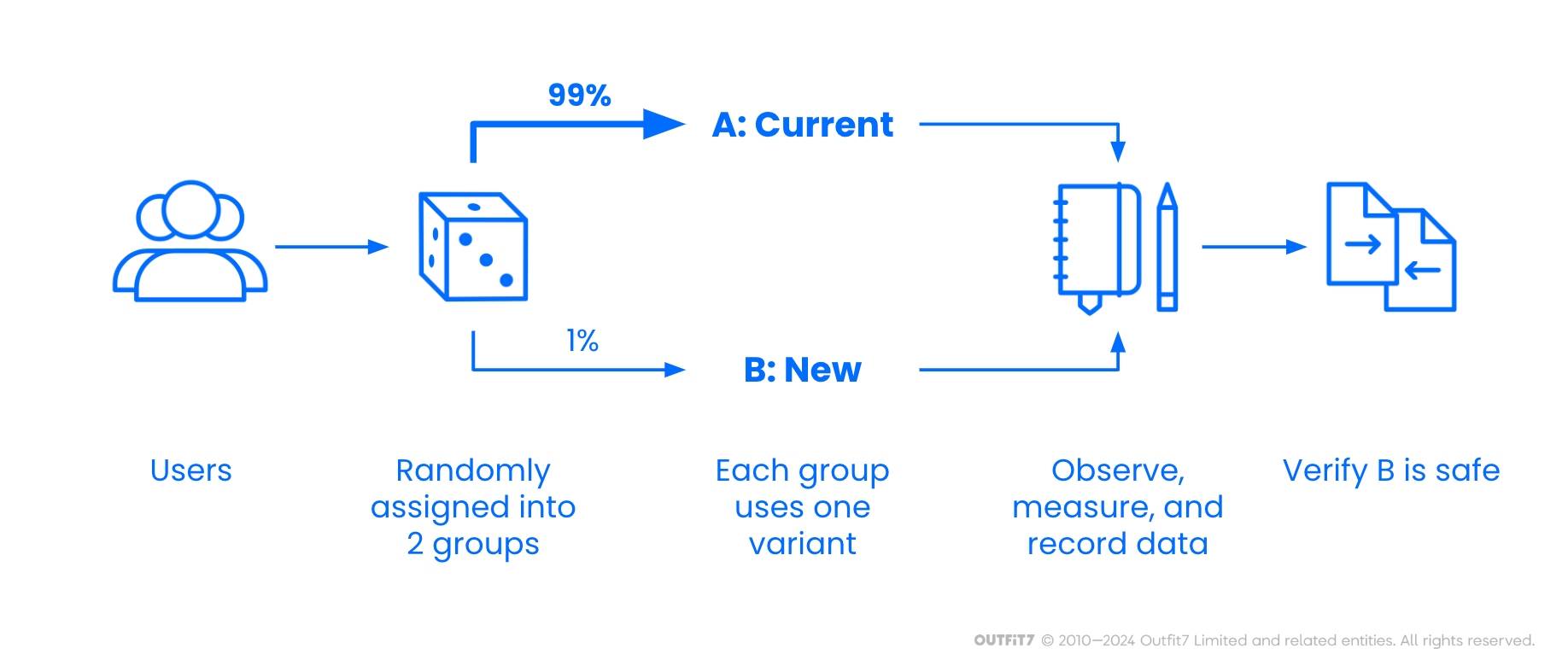

During A/B testing, users are randomly assigned to one of two groups. The first group, called the control group, uses the original product, while the second group uses the new variant. Then, user interactions with the product variants are observed, measured and recorded, along with overall business outcomes.
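One common way to implement random assignment is to hash a stable user identifier together with an experiment name, so each user always lands in the same group without the assignment having to be stored. The sketch below assumes such a scheme; `assign_group` and the experiment name `tutorial_length_test` are hypothetical and not taken from Outfit7's infrastructure.

```python
import hashlib

def assign_group(user_id: str, experiment: str,
                 groups: tuple = ("control", "variant")) -> str:
    """Hypothetical helper: deterministically map a user to one group.

    Hashing "experiment:user_id" means the same user always gets the same
    group, while different experiments shuffle users independently.
    """
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).digest()
    # Interpret the first 8 bytes of the hash as a large unsigned integer
    bucket = int.from_bytes(digest[:8], "big")
    return groups[bucket % len(groups)]

# Example: split 10,000 hypothetical users between control and one variant
counts = {"control": 0, "variant": 0}
for i in range(10_000):
    counts[assign_group(f"user-{i}", "tutorial_length_test")] += 1
print(counts)  # each group should receive roughly half of the users
```

Because the assignment is a pure function of the user and experiment, any server or client can recompute it, and passing more names in `groups` splits users evenly across several variants plus the control.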

Once enough data is collected, statistical methods are used to judge how each variant performed according to various KPIs, such as engagement time, user retention, player progression speed, revenue from ads and in-app purchases or users’ lifetime value. Multiple variants can be tested at the same time, but each is always compared to the control group.
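As a minimal sketch of the comparison step, assume the KPI is a proportion such as day-1 retention. Each variant can then be compared with the control group using a two-sided two-proportion z-test, and a Bonferroni correction (dividing the significance level by the number of variants) keeps the overall false-positive rate in check when several variants run at once. This is one standard choice, not necessarily the method Outfit7 uses; the function names and counts are made up for illustration, and other KPIs such as revenue typically call for different tests.

```python
import math

def two_proportion_z_test(successes_a, n_a, successes_b, n_b):
    """Two-sided z-test for the difference between two proportions."""
    p_a = successes_a / n_a
    p_b = successes_b / n_b
    # Pooled proportion under the null hypothesis that both rates are equal
    p_pool = (successes_a + successes_b) / (n_a + n_b)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) == erfc(|z| / sqrt(2))
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value

def compare_to_control(control, variants, alpha=0.05):
    """Compare every variant only to the control group, with a Bonferroni
    correction for the number of comparisons."""
    adjusted_alpha = alpha / len(variants)
    results = {}
    for name, (successes, n) in variants.items():
        z, p = two_proportion_z_test(control[0], control[1], successes, n)
        results[name] = {"z": z, "p_value": p, "significant": p < adjusted_alpha}
    return results

# Hypothetical counts: (retained users, users in group)
control = (4_000, 10_000)
variants = {"B": (4_000, 10_000), "C": (4_300, 10_000)}
for name, r in compare_to_control(control, variants).items():
    print(name, round(r["z"], 2), r["significant"])
```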

Finally, A/B test results are summarized and presented to decision-makers (in our case the product team, paid user acquisition team or executives) in order to help them make data-driven business decisions.

Examples

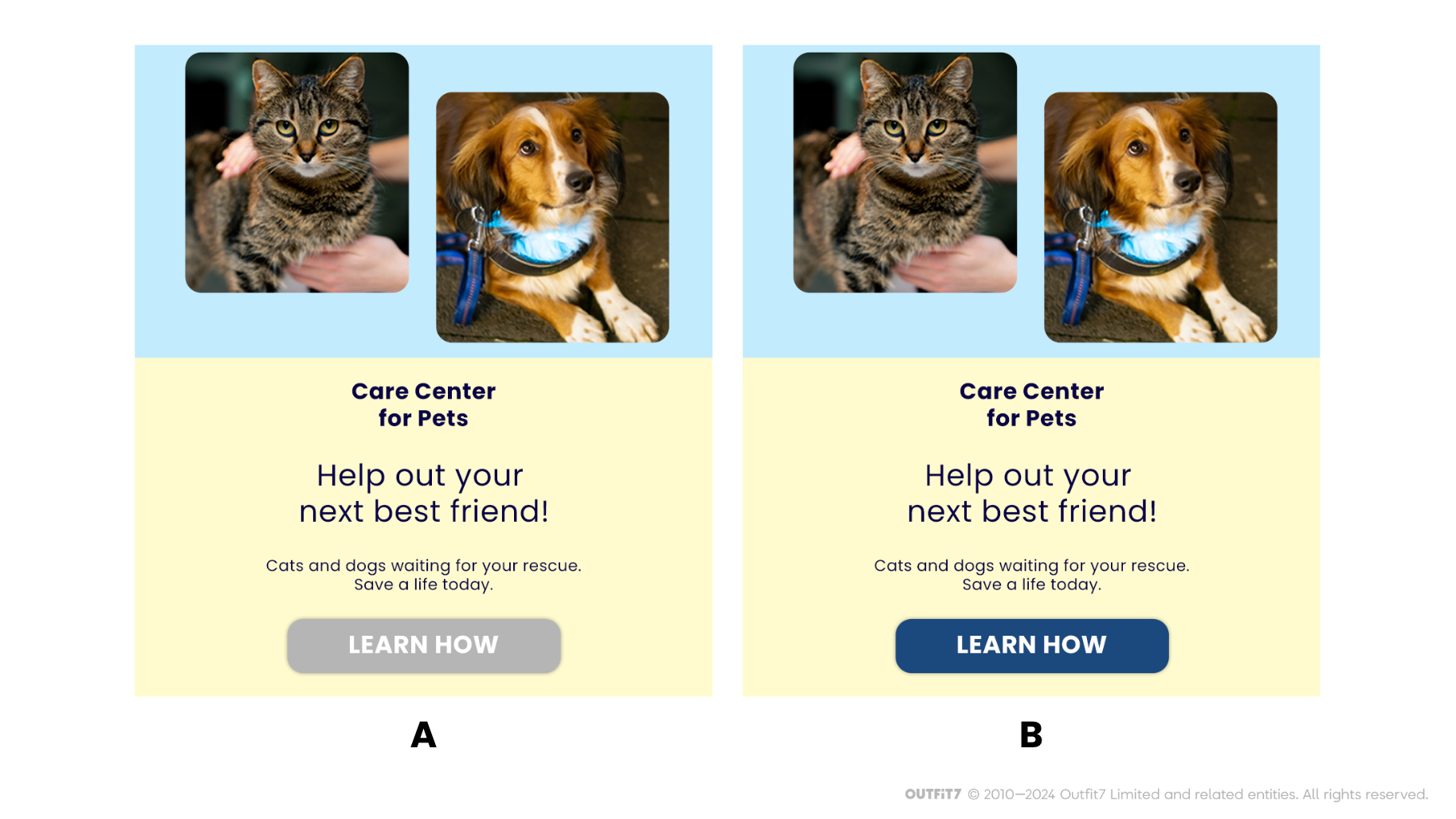

Let’s start with an example of an ineffective A/B test that often appears in learning materials, and even in practice. The figure shows two creative variants of the same ad. Although this illustrates what an A/B test might look like, such a test would most likely end up being counterproductive.

In my experience, it would be practically impossible to measure any statistically significant difference in performance between these two creative variants. People who care about the Care Center for Pet would click the “Learn how” button regardless of its color, while people who are not interested would dismiss the ad even if the button were blue.

As a result, no useful information could be extracted from the test data, and all the work required to run and analyze the test would go to waste. However, an A/B test with alternative call-to-action text designed to appeal to viewers’ emotions (e.g. “Save Leo!”) might make more sense.

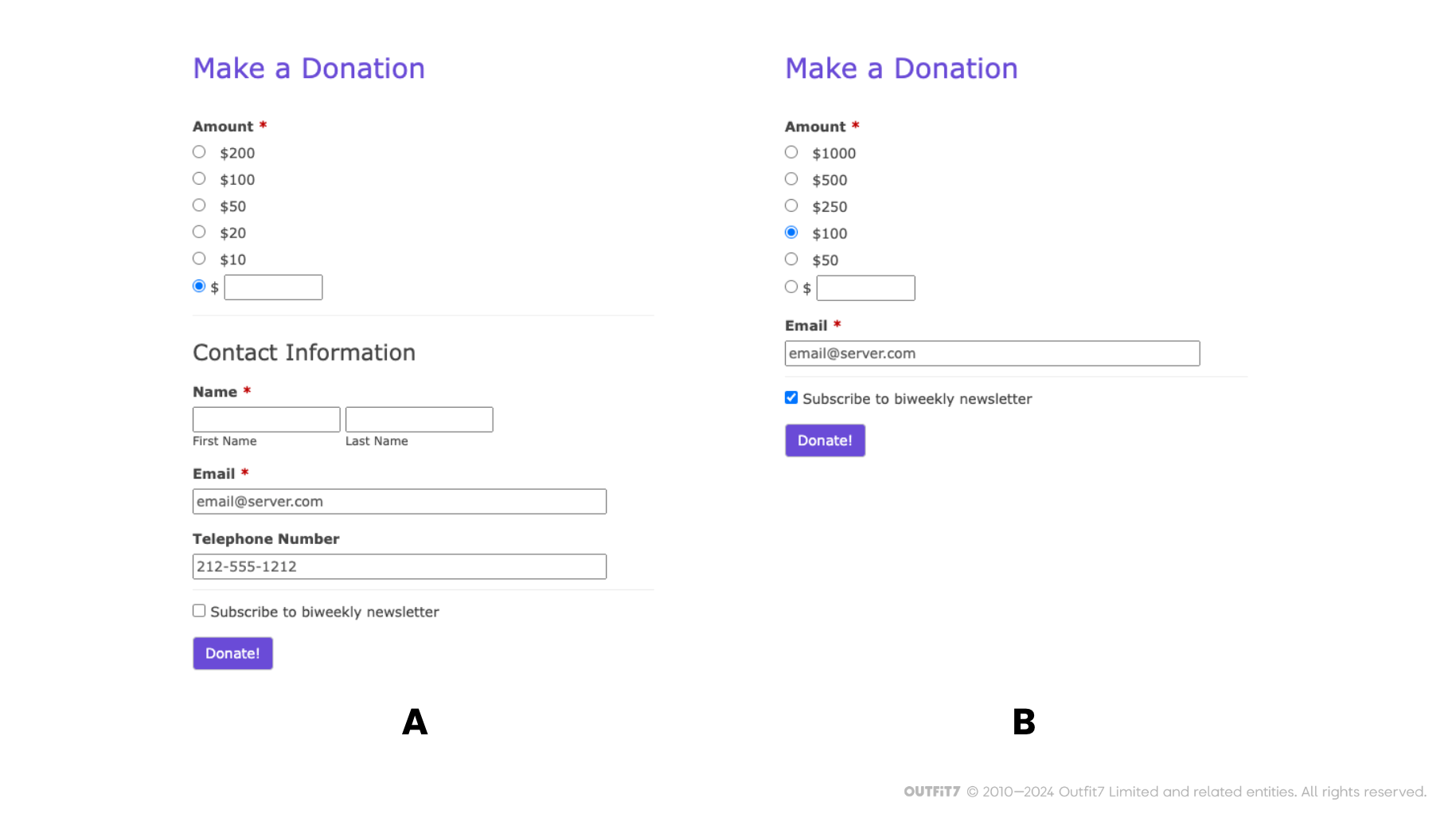

Let’s continue by looking at potential A/B tests for a donation form on a pet shelter website. First, the shelter could test removing the lengthy part of the form where users must enter contact information before donating (see variant B in the figure). Other test options could revolve around the donation amounts, for instance removing the $10 and $20 options, adding $500 and $1000 options, and/or switching the default from a custom value to $100. Similarly, a web shop can use pricing or discount tests when extending its business to new products or regions.

From the world of Outfit7, one example is an A/B test measuring how unlocking wardrobe items such as clothes, hats and shoes works in the My Talking Angela 2 mobile game. In the original version, some items were unlocked only through progression (i.e. higher levels unlock more items). However, this limited the number of items players could use, and the items were not monetised well. Therefore, we tested the option of unlocking items by paying for them with an in-app currency (90 gems in the example shown). In this way, players got access to more items, which became directly monetised (gems can be earned in the game or bought through in-app purchases). We tested multiple variants depending on which currency was used (e.g. coins, which are easier to earn than gems), how the prices were set, and whether purchased items were unlocked forever or only for a limited time.

Why we do A/B testing

There are three main goals of A/B testing: to improve the product, to improve monetisation, or to learn something new. Let’s take a look at each of them.

Improving the product

This category of A/B tests measures which variant users like best. For example, mobile games usually include tutorials that teach new players how to play. If the tutorial is too long, it becomes boring for users who are familiar with similar games and just want to start playing. On the other hand, if the tutorial is too short, not clear enough, or not available at all, players may not understand the game, feel frustrated, and quit. Instead of guessing, we can use an A/B test (in addition to careful game design) to optimize the tutorial by comparing several variants: no tutorial, a short tutorial, an in-depth tutorial, or a tutorial split into pieces and shown gradually as the player explores the game.

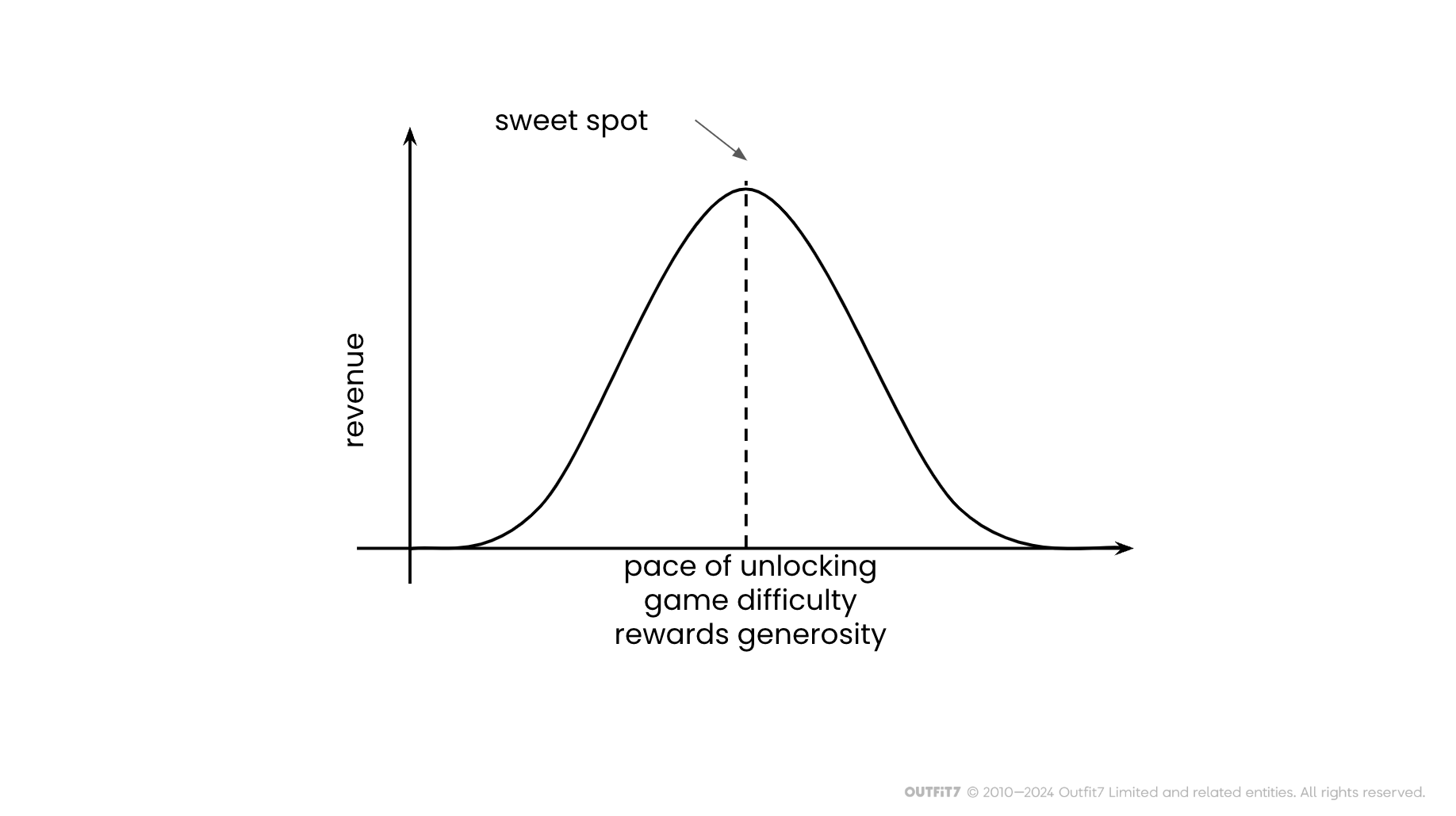

Similarly, there are often several product parameters that require careful tuning. In the case of video games, A/B tests can be used to find the sweet spot for the game’s difficulty, the pace of progression and of unlocking new features and game items, reward generosity and the general in-game economy.

Improving monetization

A better product usually leads to higher profit; however, A/B tests can also be used to optimize monetisation directly without changing the product itself. The obvious example is price setting: either globally, for a particular market, or relative to product tier (e.g. basic vs. regular vs. pro). Another example is optimizing special offers: how much of a discount to offer, when, to whom, for which products, and how to advertise them. With regard to game monetisation, the following parameters can be tested: frequency of ads shown in the game, floor prices (the lowest price an advertising space is sold for), ad mediation algorithms or providers (i.e. services that sell ad space on behalf of the game publisher to the highest bidder), prices of in-app purchases and so on.
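To see why a floor price is worth testing rather than simply maximizing, consider a toy model. The sketch below assumes a simplified second-price auction per ad impression: the winner pays the larger of the runner-up bid and the floor, and impressions whose best bid is below the floor go unsold. Real ad mediation rules differ between providers, and the bids and function names here are hypothetical.

```python
from typing import List, Optional

def clearing_price(bids: List[float], floor_price: float) -> Optional[float]:
    """Price paid for one impression in a simplified second-price auction
    with a floor; None means the impression goes unsold."""
    ranked = sorted(bids, reverse=True)
    if not ranked or ranked[0] < floor_price:
        return None
    # The winner pays the larger of the runner-up bid and the floor price
    runner_up = ranked[1] if len(ranked) > 1 else 0.0
    return max(runner_up, floor_price)

def simulate_floor(auctions: List[List[float]], floor_price: float):
    """Total revenue and fill rate (share of impressions sold) for one floor."""
    prices = [clearing_price(bids, floor_price) for bids in auctions]
    sold = [p for p in prices if p is not None]
    return sum(sold), len(sold) / len(auctions)

# Hypothetical bids for three impressions
auctions = [[1.0, 0.2], [2.0, 1.8], [0.6, 0.5]]
for floor in (0.0, 0.9, 1.9):
    revenue, fill_rate = simulate_floor(auctions, floor)
    print(f"floor={floor}: revenue={revenue:.2f}, fill rate={fill_rate:.2f}")
```

In this toy example a moderate floor (0.9) earns more than no floor (2.70 vs. 2.50), while a high floor (1.9) leaves too many impressions unsold and earns only 1.90. Since the real bid distribution is unknown, the best floor has to be found empirically, which is exactly what an A/B test does.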

Testing to learn

Usually, only variants that are believed to improve the product or its monetisation are tested, but in order to learn about the product or its users, we sometimes test variants that we’d normally never offer. Let’s look at two examples.

In Talking Tom Hero Dash, players control a running character, avoiding obstacles and collecting rewards. In addition to running, players occasionally get to use a spaceship that enables the character to temporarily fly and shoot. We already had three spaceships available and were planning to add a fourth. Before launching it, we decided to do an A/B test, because the cost of developing a new spaceship is relatively high, and we suspected that adding more spaceships might have diminishing returns.

We designed a test with eight groups in order to learn as much as possible. Group A had no spaceship; groups B, C, D had one spaceship each; groups E, F, G had two, and group H had all three spaceships available. Effectively, we removed a part of the game, making it worse for a limited time for some players. But this allowed us to measure several things:

how much game performance was improved by adding the three spaceships (was it worth investing in?)

which of the three spaceships performed the best (which one do players like the most?)

how big the differences between spaceships really were (are we consistently making good spaceships?)

how much game performance improved with each additional spaceship (does it make sense to invest in adding a fourth one?)
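The eight groups form a full factorial design: every subset of the three spaceships, from none to all three, gets its own group. The sketch below enumerates those groups and estimates the quantities listed above from per-group KPI means. The spaceship identifiers, the helper names and the synthetic KPI values are hypothetical; they only illustrate the structure of the analysis, not our actual results.

```python
from itertools import combinations

# Hypothetical identifiers for the three existing spaceships
SPACESHIPS = ("ship_1", "ship_2", "ship_3")

def factorial_groups(items):
    """Every subset of `items`, ordered from none to all of them."""
    return [frozenset(c) for k in range(len(items) + 1)
            for c in combinations(items, k)]

def main_effects(group_means, items):
    """For each item: mean KPI of groups that include it minus mean KPI of
    groups that do not (the standard main effect in a factorial design)."""
    effects = {}
    for item in items:
        with_item = [m for g, m in group_means.items() if item in g]
        without = [m for g, m in group_means.items() if item not in g]
        effects[item] = sum(with_item) / len(with_item) - sum(without) / len(without)
    return effects

def mean_by_count(group_means):
    """Mean KPI by number of spaceships available, to spot diminishing returns."""
    by_count = {}
    for g, m in group_means.items():
        by_count.setdefault(len(g), []).append(m)
    return {k: sum(v) / len(v) for k, v in sorted(by_count.items())}

groups = factorial_groups(SPACESHIPS)
labels = dict(zip("ABCDEFGH", groups))  # A = none, B-D = one, E-G = two, H = all

# Synthetic, purely additive KPI per group (made-up numbers)
kpi = {g: 10 + 2.0 * ("ship_1" in g) + 1.0 * ("ship_2" in g) + 0.5 * ("ship_3" in g)
       for g in groups}
print(main_effects(kpi, SPACESHIPS))
print(mean_by_count(kpi))
```

The main effects answer which spaceship performs best and how large the differences are, while the mean KPI by spaceship count shows whether each additional spaceship still adds as much as the previous one.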

The results of the test taught us a lot. Based on the results, we decided to invest in new game features instead of adding another spaceship.

In the second test, we went even further. Since most of our games are casual and aimed at a global audience, we try to keep the application size small. This enables quick downloads from app stores, reduces the cost of mobile data transfer for our players and lets players with low-end devices enjoy our games. However, it requires significant development effort and limits what the art team can do. Multiply that effort by the number of games that we develop and maintain, and you get the equivalent of a whole team that could be working on a new game prototype instead of keeping app sizes small.

We wanted to know whether keeping the app size small was worth the effort, given that players using modern phones and mobile networks might not even notice the difference. To get a definitive answer, we picked one of our games with a lower number of daily users and lower revenue (to limit the potential negative impact of the test) and a country with a large population but many low-end devices. We released an update that significantly increased the app size and measured the impact. We planned to run the test for a couple of weeks, but the results were so clear that we stopped it after a couple of days: installs plummeted and uninstalls soared, a clear indicator that our audience does not accept large app sizes.

Canary testing

Last but not least, A/B test infrastructure and methodology can be repurposed for canary testing. With this type of test, a new version of the product is initially released to a small subset of the user base (e.g. 1% or 5%), and its impact on business performance, as well as on system infrastructure (e.g. app crash rate, server response times, CPU and memory utilization of server nodes), is monitored. This mitigates the risk of releasing a faulty product by limiting the number of potentially affected users, and it gives the team an opportunity to debug and tune the new version. If no issues are discovered, the share of users with access to the new version is gradually increased until all users are on the new version.
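A minimal sketch of such a gradual rollout, assuming users are bucketed by a stable hash and the ramp advances only while a single guardrail metric (crash rate) stays close to the baseline. The ramp stages, the 10% tolerance and all function names are hypothetical; a real canary would watch several metrics, such as server response times and CPU and memory utilization, and would wait for enough data at each stage before advancing.

```python
import hashlib

# Hypothetical ramp schedule: percentage of users on the new version
RAMP = (1, 5, 25, 50, 100)

def rollout_bucket(user_id: str, release: str) -> int:
    """Stable bucket in 0..99 for a user and a given release."""
    digest = hashlib.sha256(f"{release}:{user_id}".encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % 100

def gets_new_version(user_id: str, release: str, rollout_percent: int) -> bool:
    # Users whose bucket is below the current percentage get the new version,
    # so raising the percentage only adds users and never removes earlier ones.
    return rollout_bucket(user_id, release) < rollout_percent

def next_rollout_percent(current: int, canary_crash_rate: float,
                         baseline_crash_rate: float, tolerance: float = 0.10) -> int:
    """Advance to the next ramp stage, or return 0 (roll back) if the canary's
    crash rate exceeds the baseline by more than `tolerance` (relative)."""
    if canary_crash_rate > baseline_crash_rate * (1 + tolerance):
        return 0
    later = [p for p in RAMP if p > current]
    return later[0] if later else current
```

For example, `next_rollout_percent(5, 0.010, 0.010)` moves the release from 5% to 25% of users, while a canary crash rate of 0.020 against a baseline of 0.010 returns 0 and rolls the release back.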

Stay tuned for part 2, where I will dive deep into how we do A/B testing at Outfit7.